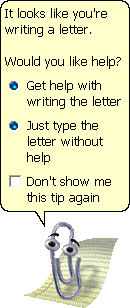

Meet Evil Cliddy

Virtual assistants have become quite common, and they are usually cast as benefactors. What if an assistant could be evil? How far would you be willing to interact with it or react to it? Will it degrade, enhance, or even define the user experience?

Creating Cliddy has been a significant driver of my motivations and ideas. This simple character that will serve as the base for my next drawing app has been a source of fun in recent days.

It is a throwback to the days when virtual assistants were displayed with an avatar, without "skills" or "actions" or whatever Siri now has. An agent to be used as the narrative controller of a story, though I am not sure where it will lead me.

The more I work on it, the more the idea of making "products" on top of real characters grows in me, up to the point where I am starting to believe that Cliddy can be not only the central point of the whole UI, but also the focus and target of the entire project.

Like Clippy, the old Microsoft Office assistant, my Cliddy is initially intended to be helpful, communicative, and an enhancer of what can be done with the tool being presented to the user. A natural bridge from the product owner's mind into the product user's interactions and intentions. Except "Shape the Pixel" is not a product. It is meant to be a tool with personality, a daydream of sorts with all its degrees of madness.

A large spectrum

All app assistants are typically well-intended. Some are even sugar-coated to the extreme (Hey Siri, how's it going?). Some are not that sugar-coated and prefer a more dehydrated, linter-type list of suggestions that shows up in some modal or sidebar to help or guide you. Regardless of the style of virtual assistant, the well-intended part is a common assumption.

But what if it isn't? What if, in my case, Cliddy is instead an arrogant, pretentious prick? A real gem of an asshole? (like me, aha) What if Cliddy could be a nagging evil character that is just there to purposefully annoy and alienate you? Would you still use a product or a tool with such an agent? How would it "assist" if it is actively fighting against the user? (an assistant with a marked personality, like the Star Wars robots - yes, I am watching The Mandalorian).

Maybe when centering the UI of a tool around a character/assistant, it is possible to detach the value of that character from its attitude. What if the benefits of having an assistant are not so much about it being helpful but more about having an intermediary human-like presence in the UI? (an anthropomorphized UI element - even if it happens to be symbolically evil). Will the active presence of some agent on the UI by itself outweigh its direct helpfulness? Will it make the app more engaging and fun to use? I don't know the answers to these questions. I also don't propose answering them formally, but I will explore them and create stuff that might put these hypotheses to the test. Or not, ahah :D

Meet Cliddy's Evil Brother

The eyes are slightly cut, and it can now speak (maybe in the future it will tell "Yo Momma" jokes 😂)

💩